When auditing websites, I often see the same picture: a beautiful design and quality content, but under the hood, there’s chaos. The site’s structure resembles a city built without a plan, full of dead-end streets, abandoned neighborhoods, and constant traffic jams on the main avenues. In the world of SEO, this leads to wasted link equity, poor indexation of key pages, and consequently, lost revenue.

The goal of this article is to be your practical guide to “urban planning” for your website. This won't be an exercise in juggling complex formulas. Instead, using simple analogies and step-by-step instructions, I'll explain how to audit your current structure, design an effective new one, and implement it, transforming your site from a confusing labyrinth into a model metropolis that’s clear to both users and search engines.

The Fundamental Laws of Architecture

Before you can build, you have to understand the laws of physics. In SEO architecture, there are two: PageRank and Crawl Budget.

PageRank (Link Equity)

The Water Pipe Analogy

Imagine your website is a system of pipes, and PageRank is water.

- The water source is all the external links pointing to your site. They pour “water” into the system.

- The widest pipes are your most authoritative pages (most often, the homepage). The most “water” flows through them.

- Every internal link is a pipe that redirects the flow of water to another page.

The problem with most websites is that their pipes are leaking in all the wrong places. For example, by placing dozens of links on the homepage to unimportant pages (“About Us,” “Careers,” “Privacy Policy”), they direct the most powerful streams of authority to pages that don't generate revenue and aren't meant to rank for competitive queries. Our task is to learn how to direct this “water” to the pages that should be driving traffic and making money.

How It Really Works: Deconstructing the PageRank Formula

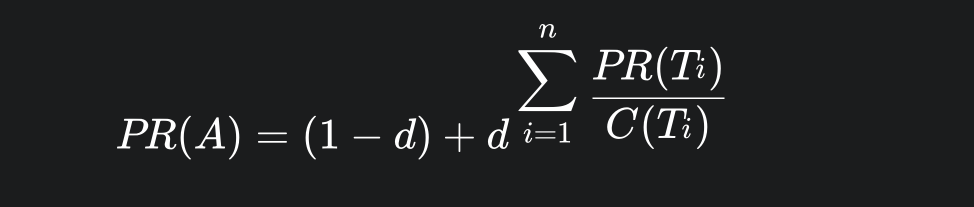

While the water analogy is a great illustration, a mathematical model lies at its core. The classic formula looks like this:

$$PR(A) = (1 - d) + d \left( \frac{PR(T_1)}{C(T_1)} + \cdots + \frac{PR(T_n)}{C(T_n)} \right)$$

Don't be intimidated; I'll break it down.

- $PR(A)$ is the PageRank of page A, which is what we want to calculate.

- $d$ is the “damping factor,” typically set to 0.85. Think of it as the probability that a user keeps clicking links rather than getting bored and jumping to a random page. The remaining $(1 - d)$, or 15%, is a base value that every page receives regardless of its links.

- $T_i$ represents each page that links to page A.

- $PR(T_i)$ is the PageRank of each of those linking pages.

- $C(T_i)$ is the most important part for us in practice: the total number of outgoing links on each page $T_i$.
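If you'd like to watch the formula at work, here is a minimal Python sketch that applies it repeatedly to a small site until the values settle. The four page names and their links are hypothetical, and starting every page at 1.0 is my own choice for the illustration.

```python
def classic_pagerank(links, d=0.85, iterations=50):
    """Repeatedly apply PR(A) = (1 - d) + d * sum(PR(T_i) / C(T_i)).

    links maps each page to the list of pages it links to.
    Every page starts with a PageRank of 1.0.
    """
    pages = set(links) | {t for targets in links.values() for t in targets}
    pr = {page: 1.0 for page in pages}
    for _ in range(iterations):
        incoming = {page: 0.0 for page in pages}
        for src, targets in links.items():
            if not targets:
                continue  # a page with no outgoing links passes nothing on
            share = pr[src] / len(targets)  # PR(T_i) / C(T_i)
            for target in targets:
                incoming[target] += share
        pr = {page: (1 - d) + d * incoming[page] for page in pages}
    return pr


# Hypothetical four-page site: each page lists the pages it links to
site = {
    "home": ["services", "blog", "about"],
    "services": ["home"],
    "blog": ["home", "services"],
    "about": ["home"],
}
for page, score in sorted(classic_pagerank(site).items(), key=lambda kv: -kv[1]):
    print(f"{page:10s} {score:.3f}")
```

Because every page in this toy site has at least one outgoing link, the scores always add up to the number of pages (4 here). The homepage ends up with the most equity because every other page links back to it, and "services" outranks "blog" and "about" because it also receives a link from "blog".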

What's the value here? You don't need to calculate this by hand. The value is in understanding the fraction: PR(T_i) / C(T_i). It clearly shows that the equity your page A receives is diluted by the total number of links on the donor page. A link from a powerful page with only 5 outgoing links is worth far more than a link from the same page if it had 100 outgoing links. Understanding this formula is the mathematical justification for removing unnecessary links from your key pages.
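To put numbers on that fraction, here is a tiny worked example. It assumes a hypothetical donor page with a PageRank of 10 and computes the share a single link passes under the classic formula, $d \cdot PR(T_i) / C(T_i)$.

```python
def equity_passed(donor_pr, outgoing_links, d=0.85):
    """Equity one link passes under the classic formula: d * PR(T_i) / C(T_i)."""
    return d * donor_pr / outgoing_links


donor_pr = 10.0  # hypothetical PageRank of a strong donor page
focused = equity_passed(donor_pr, 5)      # donor carries 5 outgoing links
cluttered = equity_passed(donor_pr, 100)  # same donor carries 100 outgoing links
print(f"5 links:   {focused:.3f} per link")
print(f"100 links: {cluttered:.3f} per link")
print(f"ratio: {focused / cluttered:.0f}x")
```

The ratio is simply 100 / 5 = 20: the same donor spreads its equity twenty times thinner when it carries 100 links instead of 5.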

Crawl Budget: Google's Attention Span

Googlebot will not crawl your site indefinitely. For every site, it allocates a limited amount of resources, known as the crawl budget. Think of it as a credit limit.

If your site has thousands of “junk” pages (expired promotions, duplicates, faceted navigation URLs), the bot can spend much of its budget on them and may never get to your new, important articles or product pages. Effective architecture is how you tell Google: “Look, here are my most important pages. Please spend your budget on them, and you can ignore this other junk.”
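One quick way to gauge how much of the budget might be going to junk is to measure what share of your crawled URLs are parameterized variants. This is a minimal sketch, assuming you already have a list of crawled URLs and that your facets live in query parameters; the parameter names and example.com URLs are hypothetical, so swap in the ones your site actually uses.

```python
from urllib.parse import parse_qs, urlsplit

# Hypothetical parameter names that typically create faceted or duplicate URLs
FACET_PARAMS = {"color", "size", "sort", "price", "sessionid"}


def is_probably_junk(url):
    """Flag a URL whose query string contains a known facet/duplicate parameter."""
    params = parse_qs(urlsplit(url).query, keep_blank_values=True)
    return any(name in FACET_PARAMS for name in params)


def junk_share(urls):
    """Return the share of flagged URLs and the flagged URLs themselves."""
    flagged = [u for u in urls if is_probably_junk(u)]
    return (len(flagged) / len(urls) if urls else 0.0), flagged


crawled = [
    "https://example.com/shoes/",
    "https://example.com/shoes/?color=red",
    "https://example.com/shoes/?color=red&size=42",
    "https://example.com/shoes/?sort=price_asc",
    "https://example.com/blog/site-architecture/",
]
share, flagged = junk_share(crawled)
print(f"{share:.0%} of crawled URLs look like faceted or duplicate variants")
for url in flagged:
    print("  ", url)
```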

The Architectural Audit: A Step-by-Step Guide to Finding Flaws

In this section, I'll show you the exact steps I use in my work. We'll need a tool called Screaming Frog SEO Spider (the free version for up to 500 URLs may be sufficient for smaller sites).

Step 1: Finding Deeply Buried Pages

Run a crawl of your site. Once it's complete, navigate to the “Crawl Depth” tab. This report shows how many clicks from the homepage it takes to reach each page.

- The Rule: All commercially important pages (services, product categories) should be at a depth of no more than 3 clicks. If you see key pages at a depth of 4, 5, or more, that’s a red flag: Google tends to treat deeply buried pages as less important and may crawl them less often.
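Screaming Frog calculates this for you, but it helps to know what the number means: crawl depth is the shortest click path from the homepage, which is exactly what a breadth-first search finds. A minimal sketch, assuming a hypothetical link map (page to the pages it links to) and a hypothetical set of important pages:

```python
from collections import deque


def crawl_depths(links, home):
    """Breadth-first search: minimum number of clicks from `home` to each page."""
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # first visit in BFS = shortest click path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


# Hypothetical site map: each page lists the pages it links to
site = {
    "/": ["/services/", "/blog/"],
    "/services/": ["/services/seo/"],
    "/services/seo/": ["/services/seo/audit/"],
    "/services/seo/audit/": ["/services/seo/audit/pricing/"],
    "/blog/": [],
}
important = {"/services/seo/", "/services/seo/audit/pricing/"}
depths = crawl_depths(site, "/")
for page in sorted(important):
    d = depths.get(page)
    status = "unreachable" if d is None else ("RED FLAG" if d > 3 else "ok")
    print(f"{page:32s} depth={d} {status}")
```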

Step 2: Discovering Orphan Pages

Orphan pages are pages that have no internal links pointing to them. Users can't find them through navigation, and search engines, even if they know the pages exist, consider them unimportant.

- How to find them: This requires connecting Screaming Frog to your Google Analytics and Google Search Console data. In the settings (Configuration -> API Access), connect your accounts. After the crawl, the “Orphan Pages” report will show you pages that receive organic traffic (so Google knows about them) but have no internal links.
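Under the hood, the orphan check is a set difference: URLs that Google or your analytics know about, minus URLs that receive at least one internal link. Here is a minimal sketch, assuming you have already exported both lists; the URLs below are hypothetical, and both lists must be normalized the same way (trailing slashes, protocol) for the comparison to work.

```python
def find_orphans(known_urls, inlink_edges, home):
    """Return known URLs that no other crawled page links to.

    known_urls: URLs seen in Search Console / analytics data.
    inlink_edges: (source, destination) pairs from the crawl.
    The homepage is the crawl's starting point, so it is never reported.
    """
    linked = {dst for src, dst in inlink_edges if src != dst}  # ignore self-links
    return sorted(u for u in known_urls if u not in linked and u != home)


edges = [
    ("/", "/services/"),
    ("/", "/blog/"),
    ("/blog/", "/blog/post-1/"),
    ("/blog/post-2/", "/blog/post-2/"),  # a self-link does not rescue a page
]
gsc_urls = {"/", "/services/", "/blog/post-1/", "/blog/post-2/", "/old-promo/"}
print(find_orphans(gsc_urls, edges, "/"))
```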

Step 3: Visualizing Your Structure with Gephi

The most insightful way to understand your site's problems is to see them.

- Export: In Screaming Frog, go to Bulk Export → All Inlinks. This gives you a CSV file of all internal links.

- Import: Open Gephi (it's free, open-source software), create a new project, and import the CSV file in the Data Laboratory as an “Edges table.”

- Analyze: After you run a layout algorithm like ForceAtlas2, what was thousands of rows of data becomes an interactive map. Here’s what to look for:

- Islands: Isolated clusters of nodes (pages) that are tightly interlinked but poorly connected to the rest of the site. These are your inefficient silos.

- Comets: Long “tails” of nodes. These are often paginated series or blog posts in a chain with no cross-linking—dead ends for crawlers.

- Gravity Centers: Run the built-in PageRank calculation in Gephi to make nodes with higher authority appear larger. You'll instantly see which pages are the true centers of gravity on your site.
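Gephi's edges import looks for "Source" and "Target" columns, so it usually pays to tidy the crawler export before importing it. This is a minimal sketch, assuming the All Inlinks CSV has "Type", "Source" and "Destination" columns (check the header of your own file, as exports differ between versions). It keeps only hyperlinks, drops self-links, and merges repeated links into one weighted edge; the demo file and URLs are hypothetical.

```python
import csv
import os
import tempfile
from collections import Counter


def inlinks_to_gephi_edges(in_path, out_path):
    """Turn a crawler inlinks export into a Gephi edges table; return edge count."""
    weights = Counter()
    # utf-8-sig also copes with a byte-order mark at the start of the file
    with open(in_path, newline="", encoding="utf-8-sig") as f:
        for row in csv.DictReader(f):
            if row.get("Type", "Hyperlink") != "Hyperlink":
                continue  # skip images, CSS, JS and other non-navigational links
            src, dst = row["Source"], row["Destination"]
            if src != dst:  # drop self-links
                weights[(src, dst)] += 1  # repeated links become a heavier edge
    with open(out_path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow(["Source", "Target", "Weight", "Type"])
        for (src, dst), weight in sorted(weights.items()):
            writer.writerow([src, dst, weight, "Directed"])
    return len(weights)


# Tiny demo with a hypothetical three-row export
tmp = tempfile.mkdtemp()
sample = os.path.join(tmp, "all_inlinks.csv")
with open(sample, "w", newline="", encoding="utf-8") as f:
    f.write(
        "Type,Source,Destination\n"
        "Hyperlink,https://example.com/,https://example.com/blog/\n"
        "Hyperlink,https://example.com/,https://example.com/blog/\n"
        "Image,https://example.com/,https://example.com/logo.png\n"
    )
edges_file = os.path.join(tmp, "gephi_edges.csv")
print(inlinks_to_gephi_edges(sample, edges_file), "edge(s) written to", edges_file)
```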

Design Blueprints: From Silos to Thematic Clusters

Now that we know the problems, it's time to design the solution.

The Classic Silo Structure

This is a strict hierarchical model, like folders on a computer (e.g., site.com/services/seo/seo-audit). The downside is that it isolates topics, hindering link equity flow and the ability to build broad topical authority.

The Modern Approach: Topic Clusters

This is the model I recommend for most content-driven websites. It allows you to become the “Wikipedia” of your niche.

- Pillar Page: One monumental, comprehensive article that broadly covers a large topic (e.g., this article on Site Architecture).

- Cluster Content: Several narrower articles that delve into the subtopics of the pillar in great detail (e.g., “How to Find Orphan Pages,” “Log File Analysis for SEO,” etc.).

- The Linking Rule: All cluster content articles must link up to the pillar page. The pillar page can then link out to the most important cluster articles.

The result is a powerful, semantically related group of content. This demonstrates to Google that you have deep expertise on a subject, which is the foundation of building Topical Authority.
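Once a cluster is live, the linking rule is easy to verify automatically. A minimal sketch, assuming a hypothetical map of each page to the set of pages it links to (the URLs echo the example articles above). The first list is a hard violation of the rule; the second is informational, since the pillar only needs to link to the most important cluster articles.

```python
def audit_cluster(links, pillar, cluster_pages):
    """Check the topic-cluster linking rule against a page -> outgoing links map.

    Returns (cluster pages that don't link up to the pillar,
             cluster pages the pillar doesn't link out to).
    """
    missing_uplinks = sorted(p for p in cluster_pages if pillar not in links.get(p, set()))
    pillar_targets = links.get(pillar, set())
    not_linked_from_pillar = sorted(p for p in cluster_pages if p not in pillar_targets)
    return missing_uplinks, not_linked_from_pillar


# Hypothetical pillar and cluster pages
links = {
    "/site-architecture/": {"/orphan-pages/", "/log-file-analysis/"},
    "/orphan-pages/": {"/site-architecture/"},
    "/log-file-analysis/": {"/orphan-pages/"},
    "/crawl-depth/": {"/site-architecture/"},
}
cluster = ["/orphan-pages/", "/log-file-analysis/", "/crawl-depth/"]
up, down = audit_cluster(links, "/site-architecture/", cluster)
print("Missing link up to the pillar:", up)
print("Not linked from the pillar:", down)
```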

The Art of Interlinking & Modeling Authority Flow

This is the implementation phase, where we move from intuition to data-driven decisions.

Rules for Effective Interlinking

- Link from Power to Priority: Identify the top 10-15 most authoritative pages on your site (using Screaming Frog or an Ahrefs/Semrush report). Ensure these pages contain contextual links pointing to your most important commercial pages.

- Context is King: A link from within a paragraph, surrounded by relevant text, passes far more weight and context than a link from a generic menu or footer.

- Use Smart Anchor Text: Don't use the same anchor text for different pages. The anchor should accurately describe the page it's linking to. Use meaningful, keyword-rich phrases instead of “click here” or “read more.”
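The anchor text rules can be checked in bulk. This sketch assumes you have (anchor text, destination URL) pairs for your internal links, for example from the anchor and destination columns of a crawler's inlinks export; the list of generic phrases and the sample links are hypothetical. It reports anchors reused for different pages and generic anchors like “read more.”

```python
from collections import defaultdict

# Hypothetical list of generic anchors worth replacing
GENERIC_ANCHORS = {"click here", "read more", "here", "learn more", "more"}


def audit_anchors(links):
    """links: iterable of (anchor_text, destination_url) pairs."""
    targets_by_anchor = defaultdict(set)
    generic = []
    for anchor, url in links:
        text = " ".join(anchor.lower().split())  # normalize case and whitespace
        if text in GENERIC_ANCHORS:
            generic.append((anchor, url))
        else:
            targets_by_anchor[text].add(url)
    # The same anchor text pointing at more than one page
    ambiguous = {a: sorted(urls) for a, urls in targets_by_anchor.items() if len(urls) > 1}
    return ambiguous, generic


sample = [
    ("SEO audit", "/services/seo-audit/"),
    ("seo audit", "/blog/seo-audit-checklist/"),
    ("Read more", "/blog/orphan-pages/"),
    ("log file analysis", "/blog/log-file-analysis/"),
]
ambiguous, generic = audit_anchors(sample)
print("Anchors reused for different pages:", ambiguous)
print("Generic anchors:", generic)
```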

Modeling the Flow: From Intuition to Data

Advanced interlinking relies on probabilistic models built on Markov chains, which predict the path a “random surfer” (or crawler) would take through your site. The practical application: your goal is to make links to your most important pages the most “probable” clicks. Place them higher in the content and give them more visual prominence.

For the Pros: For large websites, you can use Python (with libraries like NetworkX and Pandas) to build a graph of your site and calculate the internal PageRank of every page. This allows you to see, based on data, which pages are hoarding authority and which are starved, so you can adjust your linking strategy accordingly.
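To make the random-surfer idea concrete, here is a minimal pure-Python sketch of a weighted Markov-chain model: each link's click probability is proportional to a prominence weight you assign, and pages without outgoing links spread their score evenly across the site. The weights and page names are illustrative assumptions, not values any search engine publishes. On a weighted `networkx.DiGraph`, `networkx.pagerank(G, alpha=0.85, weight="weight")` performs a comparable calculation and should give very similar numbers.

```python
def weighted_pagerank(edges, d=0.85, tol=1e-10, max_iter=1000):
    """Random-surfer PageRank with prominence-weighted links.

    edges: (source, target, weight) triples with positive weights, e.g. a
    hypothetical 3 for a link high in the body copy and 1 for a footer link.
    """
    pages = sorted({s for s, _, _ in edges} | {t for _, t, _ in edges})
    n = len(pages)
    out = {p: {} for p in pages}
    for s, t, w in edges:
        out[s][t] = out[s].get(t, 0.0) + w
    pr = {p: 1.0 / n for p in pages}
    for _ in range(max_iter):
        # Score stuck on dead-end pages is redistributed evenly
        dangling = sum(pr[p] for p in pages if not out[p])
        new = {p: (1 - d) / n + d * dangling / n for p in pages}
        for s, targets in out.items():
            total = sum(targets.values())
            for t, w in targets.items():
                new[t] += d * pr[s] * w / total  # probability of clicking this link
        if sum(abs(new[p] - pr[p]) for p in pages) < tol:
            return new
        pr = new
    return pr


edges = [
    ("/", "/pricing/", 3.0),  # prominent link high in the body copy
    ("/", "/careers/", 1.0),  # footer link
    ("/pricing/", "/", 1.0),
    ("/careers/", "/", 1.0),
]
scores = weighted_pagerank(edges)
for page, score in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(f"{page:12s} {score:.3f}")
```

Both pages receive exactly one link from the homepage, yet /pricing/ ends up with more than twice the score of /careers/ purely because its link is the more probable click.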

Conclusion and Action Plan

Working on site architecture isn't a one-time project; it's an ongoing process. But it's what separates average sites from the leaders in their niche. To get started, I suggest a simple action plan:

- Crawl your website with Screaming Frog.

- Identify 5-10 important pages with a crawl depth greater than 3.

- Visualize the structure with Gephi and look for isolated clusters.

- Choose one core business topic and start designing your first topic cluster.

- Fix the internal links on your top 5 most authoritative pages to ensure they point to your most valuable pages.

By taking these first steps, you'll lay the foundation for a more effective and powerful site structure.